This post explores whether, in supporting learners from disadvantaged backgrounds, educators should prioritise low attainers over high attainers, or give them equal priority.

Introduction

Last week I took umbrage at a blog post and found myself engaged in a Twitter discussion with the author, one Mr Thomas.

Put crudely, the discussion hinged on the question of whether the educational needs of ‘poor but dim’ learners should take precedence over those of the ‘poor but bright’. (This is Mr Thomas’s shorthand, not mine.)

He argued that the ‘poor but dim’ are the higher priority; I countered that all poor learners should have equal priority, regardless of their ability and prior attainment.

We began to explore the issue:

- as a matter of educational policy and principle

- with reference to inputs – the allocation of financial and human resources between these competing priorities and

- in terms of outcomes – the comparative benefits to the economy and to society from investment at the top or the bottom of the attainment spectrum.

This post presents the discussion, adding more flesh and gloss from the Gifted Phoenix perspective.

It might or might not stimulate some interest in how this slightly different take on a rather hoary old chestnut plays out in England’s current educational landscape.

But I am particularly interested in how gifted advocates in different countries respond to these arguments. What is the consensus, if any, on the core issue?

Depending on the answer to this first question, how should gifted advocates frame the argument for educationalists and the wider public?

To help answer the first question I have included a poll at the end of the post.

Do please respond to that – and feel free to discuss the second question in the comments section below.

The structure of the post is fairly complex, comprising:

- A (hopefully objective) summary of Mr Thomas’s original post.

- An embedded version of the substance of our Twitter conversation. I have removed some Tweets – mostly those from third parties – and reordered a little to make this more accessible. I don’t believe I’ve done any significant damage to either case.

- Some definition of terms, because there is otherwise much cause for confusion as we push further into the debate.

- A digressionary exploration of the evidence base, dealing with attainment data and budget allocations respectively. The former exposes what little we are told about how socio-economic gaps vary across the attainment spectrum; the latter is relevant to the discussion of inputs. Those pressed for time may wish to proceed directly to…

- …A summing up, which expands in turn the key points we exchanged on the point of principle, on inputs and on outcomes respectively.

I have reserved until close to the end a few personal observations about the encounter and how it made me feel.

And I conclude with the customary brief summary of key points and the aforementioned poll.

It is an ambitious piece and I am in two minds as to whether it hangs together properly, but you are ultimately the judges of that.

What Mr Thomas Blogged

The post was called ‘The Romance of the Poor but Bright’ and the substance of the argument (incorporating several key quotations) ran like this:

- The ‘effort and resources, of schools but particularly of business and charitable enterprise, are directed disproportionately at those who are already high achieving – the poor but bright’.

- Moreover ‘huge effort is expended on access to the top universities, with great sums being spent to make marginal improvements to a small set of students at the top of the disadvantaged spectrum. They cite the gap in entry, often to Oxbridge, as a significant problem that blights our society.’

- This however is ‘the pretty face of the problem. The far uglier face is the gap in life outcomes for those who take least well to education.’

- ‘Popular discourse is easily caught up in the romance of the poor but bright’ but ‘we end up ignoring the more pressing problem – of students for whom our efforts will determine whether they ever get a job or contribute to society’. For ‘when did you last hear someone advocate for the poor but dim?’

- ‘The gap most damaging to society is in life outcomes for the children who perform least well at school.’ Three areas should be prioritised to improve their educational outcomes:

o Improving alternative provision (AP) which ‘operates as a shadow school system, largely unknown and wholly unappreciated’ – ‘developing a national network of high–quality alternative provision…must be a priority if we are to close the gap at the bottom’.

o Improving ‘consistency in SEN support’ because ‘schools are often ill equipped to cope with these, and often manage only because of the extraordinary effort of dedicated staff’. There is ‘inconsistency in funding and support between local authorities’.

o Introducing clearer assessment of basic skills, ‘so that a student could not appear to be performing well unless they have mastered the basics’.

- While ‘any student failing to meet their potential is a dreadful thing’, the educational successes of ‘students with incredibly challenging behaviour’ and ‘complex special needs…have the power to change the British economy, far more so than those of their brighter peers.’

A footnote adds ‘I do not believe in either bright or dim, only differences in epigenetic coding or accumulated lifetime practice, but that is a discussion for another day.’

Indeed it is.

Our ensuing Twitter discussion

The substance of our Twitter discussion is captured in the embedded version immediately below. (Scroll down to the bottom for the beginning and work your way back to the top.)

Tweets by @giftedphoenix

Defining Terms

Poor, Bright and Dim

I take poor to mean socio-economic disadvantage, as opposed to any disadvantage attributable to the behaviours, difficulties, needs, impairments or disabilities associated with AP and/or SEN.

I recognise of course that such a distinction is more theoretical than practical, because, when learners experience multiple causes of disadvantage, the educational response must be holistic rather than disaggregated.

Nevertheless, the meaning of ‘poor’ is clear – that term cannot be stretched to include these additional dimensions of disadvantage.

The available performance data foregrounds two measures of socio-economic disadvantage: current eligibility for and take up of free school meals (FSM) and qualification for the deprivation element of the Pupil Premium, determined by FSM eligibility at some point within the last 6 years (known as ‘ever-6’).

Both are used in this post. Distinctions are typically between the disadvantaged learners and non-disadvantaged learners, though some of the supporting data compares outcomes for disadvantaged learners with outcomes for all learners, advantaged and disadvantaged alike.

The gaps that need closing are therefore:

- between ‘poor and bright’ and other ‘bright’ learners (The Excellence Gap) and

- between ‘poor and dim’ and other ‘dim’ learners. I will christen this The Foundation Gap.

The core question is whether The Foundation Gap takes precedence over The Excellence Gap or vice versa, or whether they should have equal billing.

This involves immediate and overt recognition that classification as AP and/or SEN is not synonymous with the epithet ‘poor’, because there are many comparatively advantaged learners within these populations.

But such a distinction is not properly established in Mr Thomas’s blog, which applies the epithet ‘poor’ but then treats the AP and SEN populations as homogeneous and somehow associated with it.

By ‘dim’ I take Mr Thomas to mean the lowest segment of the attainment distribution – one of his tweets specifically mentions ‘the bottom 20%’. The AP and/or SEN populations are likely to be disproportionately represented within these two deciles, but they are not synonymous with them either.

This distinction will not be lost on gifted advocates who are only too familiar with the very limited attention paid to twice exceptional learners.

Those from poor backgrounds within the AP and/or SEN populations are even more likely to be disproportionately represented in ‘the bottom 20%’ than their more advantaged peers, but even they will not constitute the entirety of ‘the bottom 20%’. A Venn diagram would likely show significant overlap, but that is all.

Hence disadvantaged AP/SEN learners are almost certainly a relatively poor proxy for the ‘poor but dim’.

That said I could find no data that quantifies these relationships.

The School Performance Tables distinguish a ‘low attainer’ cohort. (In the Secondary Tables the definition is determined by prior KS2 attainment and in the Primary Tables by prior KS1 attainment.)

These populations comprise some 15.7% of the total population in the Secondary Tables and about 18.0% in the Primary Tables. But neither set of Tables applies the distinction in their reporting of the attainment of those from disadvantaged backgrounds.

It follows from the definition of ‘dim’ that, by ‘bright’, Mr Thomas probably intends the two corresponding deciles at the top of the attainment distribution, even though he seems most exercised about the subset with the capacity to progress to competitive universities, particularly Oxford and Cambridge. This is a far more select group of exceptionally high attainers – and an even smaller group of exceptionally high attainers from disadvantaged backgrounds.

A few AP and/or SEN students will likely fall within this wider group, fewer still within the subset of exceptionally high attainers. AP and/or SEN students from disadvantaged backgrounds will be fewer again, if indeed there are any at all.

The same issues with data apply. The School Performance Tables distinguish ‘high attainers’, who constitute over 32% of the secondary cohort and 25% of the primary cohort. As with low attainers, we cannot isolate the performance of those from disadvantaged backgrounds.

We are forced to rely on what limited data is made publicly available to distinguish the performance of disadvantaged low and high attainers.

At the top of the distribution there is a trickle of evidence about performance on specific high attainment measures and access to the most competitive universities. Still greater transparency is fervently to be desired.

At the bottom, I can find very little relevant data at all – we are driven inexorably towards analyses of the SEN population, because that is the only dataset differentiated by disadvantage, even though we have acknowledged that such a proxy is highly misleading. (Equivalent AP attainment data seems conspicuous by its absence.)

AP and SEN

Before exploring these datasets I ought to provide some description of the different programmes and support under discussion here, if only for the benefit of readers who are unfamiliar with the English education system.

Alternative Provision (AP) is intended to meet the needs of a variety of vulnerable learners:

‘They include pupils who have been excluded or who cannot attend mainstream school for other reasons: for example, children with behaviour issues, those who have short- or long-term illness, school phobics, teenage mothers, pregnant teenagers, or pupils without a school place.’

AP is provided in a variety of settings where learners engage in timetabled education activities away from their school and school staff.

Providers include further education colleges, charities, businesses, independent schools and the public sector. Pupil Referral Units (PRUs) are perhaps the best-known settings – there are some 400 nationally.

A review of AP was undertaken by Taylor in 2012 and the Government subsequently embarked on a substantive improvement programme. This rather gives the lie to Mr Thomas’ contention that AP is ‘largely unknown and wholly unappreciated’.

Taylor complains of a lack of reliable data about the number of learners in AP but notes that the DfE’s 2011 AP census recorded 14,050 pupils in PRUs and a further 23,020 in other settings on a mixture of full-time and part-time placements. This suggests a total of slightly over 37,000 learners, though the FTE figure is unknown.

He states that AP learners are:

‘…twice as likely as the average pupil to qualify for free school meals’

A supporting Equality Impact Assessment qualifies this somewhat:

‘In Jan 2011, 34.6% of pupils in PRUs and 13.8%* of pupils in other AP, were eligible for and claiming free school meals, compared with 14.6% of pupils in secondary schools. [*Note: in some AP settings, free school meals would not be available, so that figure is under-stated, but we cannot say by how much.]’

If the PRU population is typical of the wider AP population, approximately one third qualify under this FSM measure of disadvantage, meaning that the substantial majority are not ‘poor’ according to our definition above.

Taylor confirms that overall GCSE performance in AP is extremely low, pointing out that in 2011 just 1.4% achieved five or more GCSE grades A*-C including [GCSEs in] maths and English, compared to 53.4% of pupils in all schools.

By 2012/13 the comparable percentages were 1.7% and 61.7% respectively (the latter for all state-funded schools), suggesting an increasing gap in overall performance. This is a cause for concern but not directly relevant to the issue under consideration.

The huge disparity is at least partly explained by the facts that many AP students take alternative qualifications and that the national curriculum does not apply to PRUs.

Data is available showing the full range of qualifications pursued. Taylor recommended that all students in AP should continue to receive ‘appropriate and challenging English and Maths teaching’.

Interestingly, he also pointed out that:

‘In some PRUs and AP there is no provision for more able pupils who end up leaving without the GCSE grades they are capable of earning.’

However, he fails to offer a specific recommendation to address this point.

Special Educational Needs (SEN) are needs or disabilities that affect children’s ability to learn. These may include behavioural and social difficulties, learning difficulties or physical impairments.

This area has also been subject to a major Government reform programme now being implemented.

There is significant overlap between AP and SEN, with Taylor’s review of the former noting that the population in PRUs is 79% SEN.

We know from the 2013 SEN statistics that 12.6% of all pupils on roll at PRUs had SEN statements and 68.9% had SEN without statements. But these populations represent only a tiny proportion of the total SEN population in schools.

SEN learners also have higher than typical eligibility for FSM. In January 2013, 30.1% of learners across all SEN categories in primary, secondary and special schools were FSM-eligible, roughly twice the rate for all pupils. However, this means that almost seven in ten are not caught by the definition of ‘poor’ provided above.

In 2012/13 23.4% of all SEN learners achieved five or more GCSEs at A*-C or equivalent, including GCSEs in English and maths, compared with 70.4% of those having no identified SEN – another significant overall gap, but not directly relevant to our comparison of the ‘poor but bright’ and the ‘poor but dim’.

Data on socio-economic attainment gaps across the attainment spectrum

Those interested in how socio-economic attainment gaps vary at different attainment levels cannot fail to be struck by how little material of this kind is published, particularly in the secondary sector, where such gaps tend to increase in size.

One cannot entirely escape the conviction that this reticence deliberately masks some inconvenient truths.

- The ideal would be to have the established high/middle/low attainer distinctions mapped directly onto performance by advantaged/disadvantaged learners in the Performance Tables but, as we have indicated, this material is conspicuous by its absence. Perhaps it will appear in the Data Portal now under development.

- Our next best option is to examine socio-economic attainment gaps on specific attainment measures that will serve as decent proxies for high/middle/low attainment. We can do this to some extent but the focus is disproportionately on the primary sector because the Secondary Tables do not include proper high attainment measures (such as measures based exclusively on GCSE performance at grades A*/A). Maybe the Portal will come to the rescue here as well. We can however supply some basic Oxbridge fair access data.

- The least preferable option is to deploy our admittedly poor proxies for low attainers – SEN and AP. But there isn’t much information from this source either.

The analysis below looks consecutively at data for the primary and secondary sectors.

Primary

We know, from the 2013 Primary School Performance Tables, that the percentages of disadvantaged and other learners achieving different KS2 levels in reading, writing and maths combined, in 2013 and 2012 respectively, were as follows:

Table 1: Percentage of disadvantaged and all other learners achieving each national curriculum level at KS2 in 2013 in reading, writing and maths combined

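| Year | L3 or below: Dis | L3 or below: Oth | L3 or below: Gap | L4 or above: Dis | L4 or above: Oth | L4 or above: Gap | L4B or above: Dis | L4B or above: Oth | L4B or above: Gap | L5 or above: Dis | L5 or above: Oth | L5 or above: Gap |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2013 | 13 | 5 | +8 | 63 | 81 | -18 | 49 | 69 | -20 | 10 | 26 | -16 |
| 2012 | x | x | x | 61 | 80 | -19 | x | x | x | 9 | 24 | -15 |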

This tells us relatively little, apart from the fact that disadvantaged learners are heavily over-represented at L3 and below and heavily under-represented at L5 and above.

The L5 gap is somewhat lower than the gaps at L4 and 4B respectively, but not markedly so. However, the L5 gap has widened slightly since 2012 while the reverse is true at L4.

This next table synthesises data from SFR51/13: ‘National curriculum assessments at key stage 2: 2012 to 2013’. It also shows gaps for disadvantage, as opposed to FSM gaps.

Table 2: Percentage of disadvantaged and all other learners achieving each national curriculum level, including differentiation by gender, in each 2013 end of KS2 test

| Test | Group | L3 D | L3 O | L3 Gap | L4 D | L4 O | L4 Gap | L4B D | L4B O | L4B Gap | L5 D | L5 O | L5 Gap | L6 D | L6 O | L6 Gap |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Reading | All | 12 | 6 | +6 | 48 | 38 | +10 | 63 | 80 | -17 | 30 | 50 | -20 | 0 | 1 | -1 |
| Reading | B | 13 | 7 | +6 | 47 | 40 | +7 | 59 | 77 | -18 | 27 | 47 | -20 | 0 | 0 | 0 |
| Reading | G | 11 | 5 | +6 | 48 | 37 | +11 | 67 | 83 | -16 | 33 | 54 | -21 | 0 | 1 | -1 |
| GPS | All | 28 | 17 | +11 | 28 | 25 | +3 | 52 | 70 | -18 | 33 | 51 | -18 | 1 | 2 | -1 |
| GPS | B | 30 | 20 | +10 | 27 | 27 | 0 | 45 | 65 | -20 | 28 | 46 | -18 | 0 | 2 | -2 |
| GPS | G | 24 | 13 | +11 | 28 | 24 | +4 | 58 | 76 | -18 | 39 | 57 | -18 | 1 | 3 | -2 |
| Maths | All | 16 | 9 | +7 | 50 | 41 | +9 | 62 | 78 | -16 | 24 | 39 | -15 | 2 | 8 | -6 |
| Maths | B | 15 | 8 | +7 | 48 | 39 | +9 | 63 | 79 | -16 | 26 | 39 | -13 | 3 | 10 | -7 |
| Maths | G | 17 | 9 | +8 | 52 | 44 | +8 | 61 | 78 | -17 | 23 | 38 | -15 | 2 | 7 | -5 |

This tells a relatively consistent story across each test and for boys as well as girls.

We can see that, at Level 4 and below, learners from disadvantaged backgrounds are clearly over-represented, perhaps with the exception of L4 GPS. But at L4B and above they are markedly under-represented.

Moreover, with the exception of L6 where low percentages across the board mask the true size of the gaps, disadvantaged learners tend to be significantly more under-represented at L4B and above than they are over-represented at L4 and below.

A different way of looking at this data is to compare the percentages of advantaged and disadvantaged learners respectively at L4 and L5 in each assessment.

- Reading: Amongst disadvantaged learners the proportion at L5 is 18 percentage points lower than the proportion at L4, but amongst advantaged learners the proportion at L5 is 12 percentage points higher than at L4.

- GPS: Amongst disadvantaged learners the proportion at L5 is 5 percentage points higher than the proportion at L4, but amongst advantaged learners the proportion at L5 is 26 percentage points higher than at L4.

- Maths: Amongst disadvantaged learners the proportion at L5 is 26 percentage points lower than the proportion at L4, but amongst advantaged learners the proportion at L5 is only 2 percentage points lower than at L4.
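For readers who want to check these three comparisons, here is a minimal Python sketch. It assumes the figures are read from the ‘All’ rows of Table 2; the variable and function names are my own and purely illustrative.

```python
# Percentages of disadvantaged (D) and other (O) learners at L4 and L5,
# copied from the 'All' rows of Table 2 (2013 end of KS2 tests).
table2_all = {
    "Reading": {"D": {"L4": 48, "L5": 30}, "O": {"L4": 38, "L5": 50}},
    "GPS": {"D": {"L4": 28, "L5": 33}, "O": {"L4": 25, "L5": 51}},
    "Maths": {"D": {"L4": 50, "L5": 24}, "O": {"L4": 41, "L5": 39}},
}


def l5_minus_l4(levels):
    """Percentage-point difference between the L5 and L4 proportions."""
    return levels["L5"] - levels["L4"]


differences = {
    test: {group: l5_minus_l4(levels) for group, levels in groups.items()}
    for test, groups in table2_all.items()
}

for test, diff in differences.items():
    # A negative value means fewer learners at L5 than at L4.
    print(f"{test}: disadvantaged {diff['D']:+d}, other {diff['O']:+d}")
```

A negative figure means the group has a smaller share at L5 than at L4; only in GPS do disadvantaged learners show a positive figure.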

If we look at 2013 gaps compared with 2012 (with teacher assessment of writing included in place of the GPS test introduced in 2013) we can see there has been relatively little change across the board, with the exception of L5 maths, which has been affected by the increasing success of advantaged learners at L6.

Table 3: Percentage of disadvantaged and all other learners achieving national curriculum levels 3-6 in each of reading, writing and maths in 2012 and 2013 respectively

| Test | Year | L3 D | L3 O | L3 Gap | L4 D | L4 O | L4 Gap | L5 D | L5 O | L5 Gap | L6 D | L6 O | L6 Gap |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Reading | 2012 | 11 | 6 | +5 | 46 | 36 | +10 | 33 | 54 | -21 | 0 | 0 | 0 |
| Reading | 2013 | 12 | 6 | +6 | 48 | 38 | +10 | 30 | 50 | -20 | 0 | 1 | -1 |
| Writing | 2012 | 22 | 11 | +11 | 55 | 52 | +3 | 15 | 32 | -17 | 0 | 1 | -1 |
| Writing | 2013 | 19 | 10 | +9 | 56 | 52 | +4 | 17 | 34 | -17 | 1 | 2 | -1 |
| Maths | 2012 | 17 | 9 | +8 | 50 | 41 | +9 | 23 | 43 | -20 | 1 | 4 | -3 |
| Maths | 2013 | 16 | 9 | +7 | 50 | 41 | +9 | 24 | 39 | -15 | 2 | 8 | -6 |

To summarise, as far as KS2 performance is concerned, there are significant imbalances at both the top and the bottom of the attainment distribution and these gaps have not changed significantly since 2012. There is some evidence to suggest that gaps at the top are larger than those at the bottom.
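The summary above can be checked against the Table 3 figures with a short Python sketch. It assumes each gap is simply the disadvantaged percentage minus the percentage for other learners; the names used are mine and illustrative only. On these figures the 2013 maths L3 gap comes out at +7, which matches Table 2.

```python
# (disadvantaged %, other %) at each level, copied from Table 3.
table3 = {
    ("Reading", 2012): {"L3": (11, 6), "L4": (46, 36), "L5": (33, 54), "L6": (0, 0)},
    ("Reading", 2013): {"L3": (12, 6), "L4": (48, 38), "L5": (30, 50), "L6": (0, 1)},
    ("Writing", 2012): {"L3": (22, 11), "L4": (55, 52), "L5": (15, 32), "L6": (0, 1)},
    ("Writing", 2013): {"L3": (19, 10), "L4": (56, 52), "L5": (17, 34), "L6": (1, 2)},
    ("Maths", 2012): {"L3": (17, 9), "L4": (50, 41), "L5": (23, 43), "L6": (1, 4)},
    ("Maths", 2013): {"L3": (16, 9), "L4": (50, 41), "L5": (24, 39), "L6": (2, 8)},
}

# Gap in percentage points: positive means disadvantaged learners are
# over-represented at that level, negative means under-represented.
gaps = {
    key: {level: dis - oth for level, (dis, oth) in levels.items()}
    for key, levels in table3.items()
}

# Change in each gap from 2012 to 2013, subject by subject.
subjects = ["Reading", "Writing", "Maths"]
changes = {
    subject: {
        level: gaps[(subject, 2013)][level] - gaps[(subject, 2012)][level]
        for level in gaps[(subject, 2013)]
    }
    for subject in subjects
}

for subject in subjects:
    print(subject, changes[subject])
```

The only change larger than three points in either direction is L5 maths, consistent with the exception noted earlier.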

Secondary

Unfortunately there is a dearth of comparable data at secondary level, principally because of the absence of published measures of high attainment.

SFR05/2014 provides us with FSM gaps (as opposed to disadvantaged gaps) for a series of GCSE measures, none of which serve our purpose particularly well:

- 5+ A*-C GCSE grades: gap = 16.0%

- 5+ A*-C grades including English and maths GCSEs: gap = 26.7%

- 5+ A*-G grades: gap = 7.6%

- 5+ A*-G grades including English and maths GCSEs: gap = 9.9%

- A*-C grades in English and maths GCSEs: gap = 26.6%

- Achieving the English Baccalaureate: gap = 16.4%

Perhaps all we can deduce is that the gaps vary considerably in size, but tend to be smaller for the relatively less demanding and larger for the relatively more demanding measures.

For specific high attainment measures we are forced to rely principally on data snippets released in answer to occasional Parliamentary Questions.

For example:

- In 2003, 1.0% of FSM-eligible learners achieved five or more GCSEs at A*/A including English and maths but excluding equivalents, compared with 6.8% of those not eligible, giving a gap of 5.8%. By 2009 the comparable percentages were 1.7% and 9.0% respectively, giving an increased gap of 7.3% (Col 568W)

- In 2006/07, the percentages of FSM-eligible pupils securing A*/A grades at GCSE in different subjects, compared with the percentages of all pupils in maintained schools doing so, were as shown in the table below (Col 808W)

Table 4: Percentage of FSM-eligible and all pupils achieving GCSE A*/A grades in different GCSE subjects in 2007

| Subject | FSM | All pupils | Gap |
|---|---|---|---|
| Maths | 3.7 | 15.6 | 11.9 |
| Eng lit | 4.1 | 20.0 | 15.9 |
| Eng lang | 3.5 | 16.4 | 12.9 |
| Physics | 2.2 | 49.0 | 46.8 |
| Chemistry | 2.5 | 48.4 | 45.9 |
| Biology | 2.5 | 46.8 | 44.3 |
| French | 3.5 | 22.9 | 19.4 |
| German | 2.8 | 23.2 | 20.4 |

- In 2008, 1% of FSM-eligible learners in maintained schools achieved A* in GCSE maths compared with 4% of all pupils in maintained schools. The comparable percentages for Grade A were 3% and 10% respectively, giving an A*/A gap of 10% (Col 488W)

There is much variation in the subject-specific outcomes at A*/A described above. But, when it comes to the overall 5+ GCSEs high attainment measure based on grades A*/A, the gap is much smaller than on the corresponding standard measure based on grades A*-C.

Further material of broadly the same vintage is available in a 2007 DfE statistical publication: ‘The Characteristics of High Attainers’.

There is a complex pattern in evidence here which is very hard to explain with the limited data available. More time series data of this nature – illustrating Excellence and Foundation Gaps alike – should be published annually so that we have a more complete and much more readily accessible dataset.

I could find no information at all about the comparative performance of disadvantaged learners in AP settings compared with those not from disadvantaged backgrounds.

Data is published showing the FSM gap for SEN learners on all the basic GCSE measures listed above. I have retained the generic FSM gaps in brackets for the sake of comparison:

- 5+ A*-C GCSE grades: gap = 12.5% (16.0%)

- 5+ A*-C grades including English and maths GCSEs: gap = 12.1% (26.7%)

- 5+ A*-G grades: gap = 10.4% (7.6%)

- 5+ A*-G grades including English and maths GCSEs: gap = 13.2% (9.9%)

- A*-C grades in English and maths GCSEs: gap = 12.3% (26.6%)

- Achieving the English Baccalaureate: gap = 3.5% (16.4%)

One can see that the FSM gaps for the more demanding measures are generally lower for SEN learners than they are for all learners. This may be interesting but, for the reasons given above, this is not a reliable proxy for the FSM gap amongst ‘dim’ learners.
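A quick way to see which measures drive this pattern is to set the two sets of gaps side by side. The Python sketch below uses only the figures listed above; the shortened measure labels and variable names are my own.

```python
# FSM gaps in percentage points: (SEN learners, all learners),
# copied from the two lists of GCSE measures above.
fsm_gaps = {
    "5+ A*-C": (12.5, 16.0),
    "5+ A*-C inc. English and maths": (12.1, 26.7),
    "5+ A*-G": (10.4, 7.6),
    "5+ A*-G inc. English and maths": (13.2, 9.9),
    "A*-C in English and maths": (12.3, 26.6),
    "English Baccalaureate": (3.5, 16.4),
}

# Measures where the FSM gap is smaller for SEN learners than for all learners.
sen_smaller = [m for m, (sen, everyone) in fsm_gaps.items() if sen < everyone]

# Measures where the FSM gap is larger for SEN learners.
sen_larger = [m for m, (sen, everyone) in fsm_gaps.items() if sen > everyone]

print("Smaller for SEN:", sen_smaller)
print("Larger for SEN:", sen_larger)
```

On these figures the SEN gap is smaller on the four A*-C and EBacc measures and larger on the two A*-G measures.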

When it comes to fair access to Oxbridge, I provided a close analysis of much relevant data in this post from November 2013.

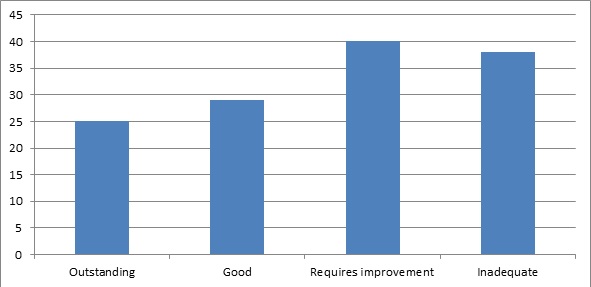

The chart below shows the number of 15 year-olds eligible for and claiming FSM at age 15 who progressed to Oxford or Cambridge by age 19. The figures are rounded to the nearest five.

Chart 1: FSM-eligible learners admitted to Oxford and Cambridge 2005/06 to 2010/11

In sum, there has been no change in these numbers over the last six years for which data has been published. So while there may have been consistently significant expenditure on access agreements and multiple smaller mentoring programmes, it has had negligible impact on this measure at least.

My previous post set out a proposal for what to do about this sorry state of affairs.

Budgets

For the purposes of this discussion we need ideally to identify and compare total national budgets for the ‘poor but bright’ and the ‘poor but dim’. But that is simply not possible.

Many funding streams cannot be disaggregated in this manner. As we have seen, some – including the AP and SEN budgets – may be aligned erroneously with the second of these groups, although they also support learners who are neither ‘poor’ nor ‘dim’ and have a broader purpose than raising attainment.

There may be some debate, too, about which funding streams should be weighed in the balance.

On the ‘bright but poor’ side, do we include funding for grammar schools, even though the percentage of disadvantaged learners attending many of them is virtually negligible (despite recent suggestions that some are now prepared to do something about this)? Should the Music and Dance Scheme (MDS) be within scope of this calculation?

The best I can offer is a commentary that gives a broad sense of orders of magnitude, to illustrate in very approximate terms how the scales tend to tilt more towards the ‘poor but dim’ rather than the ‘poor but bright’, but also to weave in a few relevant asides about some of the funding streams in question.

Pupil Premium and the EEF

I begin with the Pupil Premium – providing schools with additional funding to raise the attainment of disadvantaged learners.

The Premium is not attached to the learners who qualify for it, so schools are free to aggregate the funding and use it as they see fit. They are held accountable for these decisions through Ofsted inspection and the gap-narrowing measures in the Performance Tables.

Mr Thomas suggests in our Twitter discussion that AP students are not significant beneficiaries of such support, although provision in PRUs features prominently in the published evaluation of the Premium. It is for local authorities to determine how Pupil Premium funding is allocated in AP settings.

One might also make a case that ‘bright but poor’ learners are not a priority either, despite suggestions from the Pupil Premium Champion to the contrary.

As we have seen, the Performance Tables are not sharply enough focused on the excellence gaps at the top of the distribution and I have shown elsewhere that Ofsted’s increased focus on the most able does not yet extend to the impact on those attracting the Pupil Premium, even though there was a commitment that it would do so.

If there is Pupil Premium funding heading towards high attainers from disadvantaged backgrounds, the limited data to which we have access does not yet suggest a significant impact on the size of Excellence Gaps.

The ‘poor but bright’ are not a priority for the Education Endowment Foundation (EEF) either.

This 2011 paper explains that it is prioritising the performance of disadvantaged learners in schools below the floor targets. At one point it says:

‘Looking at the full range of GCSE results (as opposed to just the proportions who achieve the expected standards) shows that the challenge facing the EEF is complex – it is not simply a question of taking pupils from D to C (the expected level of attainment). Improving results across the spectrum of attainment will mean helping talented pupils to achieve top grades, while at the same time raising standards amongst pupils currently struggling to pass.’

But this is just after it has shown that the percentages of disadvantaged high attainers in its target schools are significantly lower than elsewhere. Other things being equal, the ‘poor but dim’ will be the prime beneficiaries.

It may now be time for the EEF to expand its focus to all schools. A diagram from this paper – reproduced below – demonstrates that, in 2010, the attainment gap between FSM and non-FSM was significantly larger in schools above the floor than in those below the floor that the EEF is prioritising. This is true in both the primary and secondary sectors.

It would be interesting to see whether this is still the case.

AP and SEN

Given the disaggregation problems discussed above, this section is intended simply to give some basic sense of orders of magnitude – lending at least some evidence to counter Mr Thomas’ assertion that the ‘effort and resources, of schools… are directed disproportionately at those who are already high achieving – the poor but bright’.

It is surprisingly hard to get a grip on the overall national budget for AP. A PQ from early 2011 (Col 75W) supplies a net current expenditure figure for all English local authorities of £530m.

Taylor’s Review fails to offer a comparable figure but my rough estimates based on the per pupil costs he supplies suggest a revenue budget of at least £400m. (Taylor suggests average per pupil costs of £9,500 per year for full-time AP, although PRU places are said to cost between £12,000 and £18,000 per annum.)

I found online a consultation document from Kent – England’s largest local authority – stating its revenue costs at over £11m in FY2014-15. Approximately 454 pupils attended Kent’s AP/PRU provision in 2012-13.
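As a very rough illustration of what the Kent figures imply per pupil, here is a Python sketch. It rests on loud assumptions: the revenue figure is treated as exactly £11m although the document says ‘over £11m’, the pupil count comes from a different year (2012-13) from the budget (FY2014-15), and nothing is known about how many placements were part-time. The result is indicative only.

```python
# Kent figures quoted above. Assumption: revenue taken as exactly £11m,
# although the consultation document says 'over £11m' (so a lower bound).
kent_revenue_gbp = 11_000_000
kent_pupils = 454  # approximate attendance in 2012-13, a different year

kent_per_pupil = kent_revenue_gbp / kent_pupils

# Taylor's per pupil cost figures, for comparison.
taylor_full_time_ap = 9_500
taylor_pru_range = (12_000, 18_000)

print(f"Kent: roughly £{kent_per_pupil:,.0f} per pupil per year")
print(f"Above Taylor's full-time AP average: {kent_per_pupil > taylor_full_time_ap}")
print(f"Above the top of Taylor's PRU range: {kent_per_pupil > taylor_pru_range[1]}")
```

Because the two Kent figures relate to different years, this should be read as an order of magnitude rather than a true unit cost.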

There must also be a significant capital budget. There are around 400 PRUs, not to mention a growing cadre of specialist AP academies and free schools. The total capital cost of the first AP free school – Derby Pride Academy – was £2.147m for a 50 place setting.

In FY2011-12, total annual national expenditure on SEN was £5.77 billion (Col 391W). There will have been some cost-cutting as a consequence of the latest reforms, but the order of magnitude is clear.

The latest version of the SEN Code of Practice outlines the panoply of support available, including the compulsory requirement that each school has a designated teacher to be responsible for co-ordinating SEN provision (the SENCO).

In short, the national budget for AP is sizeable and the national budget for SEN is huge. Per capita expenditure is correspondingly high. If we could isolate the proportion of these budgets allocated to raising the attainment of the ‘poor but dim’, the total would be substantial.

Fair Access, especially to Oxbridge, and some related observations

Mr Thomas refers specifically to funding to support fair access to universities – especially Oxbridge – for those from disadvantaged backgrounds. This is another area in which it is hard to get a grasp on total expenditure, not least because of the many small-scale mentoring projects that exist.

Mr Thomas is quite correct to remark on the sheer number of these, although they are relatively small beer in budgetary terms. (One suspects that they would be much more efficient and effective if they could be linked together within some sort of overarching framework.)

The Office for Fair Access (OFFA) estimates university access agreement expenditure on outreach in 2014-15 at £111.9m. This has to be factored in, as does DfE’s own small contribution – the Future Scholar Awards.

Were any expenditure in this territory to be criticised, it would surely be the development and capital costs for new selective 16-19 academies and free schools that specifically give priority to disadvantaged students.

The sums are large, perhaps not outstandingly so compared with national expenditure on SEN for example, but they will almost certainly benefit only a tiny localised proportion of the ‘bright but poor’ population.

There are several such projects around the country. Some of the most prominent are located in London.

The London Academy of Excellence (capacity 420) is fairly typical. It cost an initial £4.7m to establish, plus a lease costing a further £400K annually.

But this is dwarfed by the projected costs of the Harris Westminster Sixth Form, scheduled to open in September 2014. It will be housed in a former government building, and the capital cost is reputed to be £45m for a 500-place institution.

There were reportedly disagreements within Government:

‘It is understood that the £45m cost was subject to a “significant difference of opinion” within the DfE where critics say that by concentrating large resources on the brightest children at a time when budgets are constrained means other children might miss out…

But a spokeswoman for the DfE robustly defended the plans tonight. “This is an inspirational collaboration between the country’s top academy chain and one of the best private schools in the country,” she said. “It will give hundreds of children from low income families across London the kind of top quality sixth-form previously reserved for the better off.”’

Here we have in microcosm the debate to which this post is dedicated.

One blogger – a London College Principal – pointed out that the real issue was not whether the brightest should benefit over others, but how few of the ‘poor but bright’ would do so:

‘£45m could have a transformative effect on thousands of 16-19 year olds across London… £45m could have funded at least 50 extra places in each college for over 10 years, helped build excellent new facilities for all students and created a city-wide network to support gifted and talented students in sixth forms across the capital working with our partner universities and employers.’
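The capital figures quoted in this section can be put on a common footing by dividing each by the number of places. The sketch below uses only the numbers cited above. It assumes stated capacity is a fair denominator, it ignores running costs such as the London Academy of Excellence’s annual lease, and it takes the reputed £45m for Harris Westminster at face value. The function name is purely illustrative.

```python
# Capital cost per place, using only the figures quoted in this section.
# Assumptions: capacity is the denominator; running costs (such as the
# London Academy of Excellence's £400K annual lease) are excluded; the
# Harris Westminster figure is the reputed £45m, not a confirmed total.
settings = {
    "Derby Pride Academy (AP free school)": (2_147_000, 50),
    "London Academy of Excellence": (4_700_000, 420),
    "Harris Westminster Sixth Form": (45_000_000, 500),
}


def capital_cost_per_place(capital_cost: int, places: int) -> float:
    """Return the capital cost in pounds divided by the number of places."""
    return capital_cost / places


per_place = {
    name: capital_cost_per_place(cost, places)
    for name, (cost, places) in settings.items()
}

for name, value in per_place.items():
    print(f"{name}: £{value:,.0f} per place")
```

On these figures the Harris Westminster project costs roughly eight times as much per place as the London Academy of Excellence, and about twice as much as the first AP free school.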

Summing Up

There are three main elements to the discussion: the point of principle, the inputs and the impact. The following sections deal with each of these in turn.

Principle

Put bluntly, should ‘poor but dim’ kids have higher priority for educators than ‘poor but bright’ kids (Mr Thomas’s position) or should all poor kids have equal priority and an equal right to the support they need to achieve their best (the Gifted Phoenix position)?

For Mr Thomas, it seems this priority is determined by whether – and how far – the learner is behind undefined ‘basic levels of attainment’ and/or mastery of ‘the basics’ (presumably literacy and numeracy).

Those below the basic attainment threshold have higher priority than those above it. He does not say so but this logic suggests that those furthest below the threshold are the highest priority and those furthest above are the lowest.

So, pursued to its logical conclusion, this would mean that the highest attainers would get next to no support while a human vegetable would be the highest priority of all.

However, since Mr Thomas’s focus is on marginal benefit, it may be that those nearest the threshold would be first in the queue for scarce resources, because they would require the least effort and resources to lift above it.

This philosophy drives the emphasis on achievement of national benchmarks and predominant focus on borderline candidates that, until recently, dominated our assessment and accountability system.

For Gifted Phoenix, every socio-economically disadvantaged learner has equal priority to the support they need to improve their attainment, by virtue of that disadvantage.

There is no question of elevating some ahead of others in the pecking order because they are further behind on key educational measures since, in effect, that is penalising some disadvantaged learners on the grounds of their ability or, more accurately, their prior attainment.

This philosophy underpins the notion of personalised education and is driving the recent and welcome reforms of the assessment and accountability system, designed to ensure that schools are judged by how well they improve the attainment of all learners, rather than predominantly on the basis of the proportion achieving the standard national benchmarks.

I suggested that, in deriding ‘the romance of the poor but bright’, Mr Thomas ran the risk of falling into ‘the slough of anti-elitism’. He rejected that suggestion, while continuing to emphasise the need to ‘concentrate more’ on ‘those at risk of never being able to engage with society’.

I have assumed that Mr Thomas is interested primarily in KS2 and in GCSE or equivalent qualifications at KS4, given his references to KS2 L4, basic skills and ‘paper qualifications needed to enter meaningful employment’.

But his additional references to ‘real qualifications’ (as opposed to paper ones) and engaging with society could well imply a wider range of personal, social and work-related skills for employability and adult life.

My preference for equal priority would apply regardless: there is no guarantee that high attainers from disadvantaged backgrounds will necessarily possess these vital skills.

But, as indicated in the definition above, there is an important distinction to be maintained between:

- educational support to raise the attainment, learning and employability skills of socio-economically disadvantaged learners and prepare them for adult life and

- support to manage a range of difficulties – whether behavioural problems, disability, physical or mental impairment – that impact on the broader life chances of the individuals concerned.

Such a distinction may well be masked in the everyday business of providing effective holistic support for learners facing such difficulties, but this debate requires it to be made and sustained given Mr Thomas’s definition of the problem in terms of the comparative treatment of the ‘poor but bright’ and the ‘poor but dim’.

Having made this distinction, it is not clear whether he himself sustains it consistently through to the end of his post. In the final paragraphs the term ‘poor but dim’ begins to morph into a broader notion encompassing all AP and SEN learners regardless of their socio-economic status.

Additional dimensions of disadvantage are potentially being brought into play. This is inconsistent and radically changes the nature of the argument.

Inputs

By inputs I mean the resources – financial and human – made available to support the education of ‘dim’ and ‘bright’ disadvantaged learners respectively.

Mr Thomas also shifts his ground as far as inputs are concerned.

His post opens with a statement that ‘the effort and resources’ of schools, charities and businesses are ‘directed disproportionately’ at the poor but bright – and he exemplifies this with reference to fair access to competitive universities, particularly Oxbridge.

When I point out the significant investment in AP compared with fair access, he changes tack – ‘I’m measuring outcomes not just inputs’.

Then later he says ‘But what some need is just more expensive’, to which I respond that ‘the bottom end already has the lion’s share of funding’.

At this point we have both fallen into the trap of treating the entirety of the AP and SEN budgets as focused on the ‘poor but dim’.

We are failing to recognise that they are poor proxies because the majority of AP and SEN learners are not ‘poor’, many are not ‘dim’, these budgets are focused on a wider range of needs and there is significant additional expenditure directed at ‘poor but dim’ learners elsewhere in the wider education budget.

Despite Mr Thomas’s opening claim, it should be reasonably evident from the preceding commentary that my ‘lion’s share’ point is factually correct. His suggestion that AP is ‘largely unknown and wholly unappreciated’ flies in the face of the Taylor Review and the Government’s subsequent work programme.

SEN may depend heavily on the ‘extraordinary effort of dedicated staff’, but at least there are such dedicated staff! There may be inconsistencies in local authority funding and support for SEN, but the global investment is colossal by comparison with the funding dedicated on the other side of the balance.

Gifted Phoenix’s position acknowledges that inputs are heavily loaded in favour of the SEN and AP budgets. This is as it should be since, as Mr Thomas rightly notes, many of the additional services these learners need are more expensive to provide. These services are not simply dedicated to raising their attainment, but also to tackling more substantive problems associated with their status.

Whether the balance of expenditure on the ‘bright’ and ‘dim’ respectively is optimal is a somewhat different matter. Contrary to Mr Thomas’s position, gifted advocates are often convinced that too much largesse is focused on the latter at the expense of the former.

Turning to advocacy, Mr Thomas says ‘we end up ignoring the more pressing problem’ of the poor but dim. He argues in the Twitter discussion that too few people are advocating for these learners, adding that they are failed ‘because it’s not popular to talk about them’.

I could not resist countering that advocacy for gifted learners is equally unpopular, indeed ‘the word is literally taboo in many settings’. I cannot help thinking – from his footnote reference to ‘epigenetic coding’ – that Mr Thomas is amongst those who are distinctly uncomfortable with the term.

Where advocacy does survive it is focused exclusively on progression to competitive universities and, to some extent, high attainment as a route towards that outcome. The narrative has shifted away from concepts of high ability or giftedness, because of the very limited consensus about that condition (even amongst gifted advocates) and because of considerable doubt in some quarters whether it exists at all.

Outcomes

Mr Thomas maintains in his post that the successes of his preferred target group ‘have the power to change the British economy, far more so than those of their brighter peers’. This is because ‘the gap most damaging to society is in life outcomes for the children that perform least well at school’.

As noted above, it is important to remember that we are discussing here the addition of educational and economic value by tackling underachievement amongst learners from disadvantaged backgrounds, rather than amongst all the children that perform least well.

We are also leaving to one side the addition of value through any wider engagement by health and social services to improve life chances.

It is quite reasonable to advance the argument that improving the outcomes ‘of the bottom 20%’ (the Tail) will have ‘a huge socio-economic impact’ and ‘make the biggest marginal difference to society’.

But one could equally make the case that society would derive similar or even higher returns from a decision to concentrate disproportionately on the highest attainers (the Smart Fraction).

Or, as Gifted Phoenix would prefer, one could reasonably propose that the optimal returns should be achieved by means of a balanced approach that raises both the floor and the ceiling, avoiding any arbitrary distinctions on the basis of prior attainment.

From the Gifted Phoenix perspective, one should balance the advantages of removing the drag on productivity of an educational underclass against those of developing the high-level human capital needed to drive economic growth and improve our chances of success in what Coalition ministers call the ‘global race’.

According to this perspective, by eliminating excellence gaps between disadvantaged and advantaged high attainers we will secure a stream of benefits broadly commensurate with that at the bottom end.

These will include substantial spillover benefits, achieved as a result of broadening the pool of successful leaders in political, social, educational and artistic fields, not to mention significant improvements in social mobility.

It is even possible to argue that, by creating a larger pool of more highly educated parents, we can also achieve a substantial positive impact on the achievement of subsequent generations, thus reducing the size of the tail.

And in the present generation we will create many more role models: young people from disadvantaged backgrounds who become educationally successful and who can influence the aspirations of younger disadvantaged learners.

This avoids the risk that low expectations will be reinforced and perpetuated through a ‘deficit model’ approach that places excessive emphasis on removing the drag from the tail by producing a larger number of ‘useful members of society’.

This line of argument is integral to the Gifted Phoenix Manifesto.

It seems to me entirely conceivable that economists might produce calculations to justify any of these different paths.

But it would be highly inequitable to put all our eggs in the ‘poor but bright’ basket, because that penalises some disadvantaged learners for their failure to achieve high attainment thresholds.

And it would be equally inequitable to focus exclusively on the ‘poor but dim’, because that penalises some disadvantaged learners for their success in becoming high attainers.

The more equitable solution must be to opt for a ‘balanced scorecard’ approach that generates a proportion of the top end benefits and a proportion of the bottom end benefits simultaneously.

There is a risk that this reduces the total flow of benefits, compared with one or other of the inequitable solutions, but there is a trade-off here between efficiency and a socially desirable outcome that balances the competing interests of the two groups.

The personal dimension

After we had finished our Twitter exchanges, I thought to research Mr Thomas online. Turns out he’s quite the Big-Cheese-in-Embryo. Provided he escapes the lure of filthy lucre, he’ll be a mover and shaker in education within the next decade.

I couldn’t help noticing his own educational experience – public school, a First in PPE from Oxford, leading light in the Oxford Union – then graduation from Teach First alongside internships with Deutsche Bank and McKinsey.

Now he’s serving his educational apprenticeship as joint curriculum lead for maths at a prominent London Academy. He’s also a trustee of ‘a university mentoring project for highly able 11-14 year old pupils from West London state schools’.

Lucky I didn’t check earlier. Such a glowing CV might have been enough to cow this grammar school Oxbridge reject, even if I did begin this line of work several years before he was born. Not that I have a chip on my shoulder…

The experience set me wondering about the dominant ideology amongst the Teach First cadre, and how it is tempered by extended exposure to teaching in a challenging environment.

There’s more than a hint of idealism about someone from this privileged background espousing the educational philosophy that Mr Thomas professes. But didn’t he wonder where all the disadvantaged people were during his own educational experience, and doesn’t he want to change that too?

His interest in mentoring highly able pupils would suggest that he does, but also seems directly to contradict the position he’s reached here. It would be a pity if the ‘poor but bright’ could not continue to rely on his support, equal in quantity and quality to the support he offers the ‘poor but dim’.

For he could make a huge difference at both ends of the attainment spectrum – and, with his undeniable talents, he should certainly be able to do so.

Conclusion

We are entertaining three possible answers to the question whether in principle to prioritise the needs of the ‘poor but bright’ or the ‘poor but dim’:

- Concentrate principally – perhaps even exclusively – on closing the Excellence Gaps at the top

- Concentrate principally – perhaps even exclusively – on closing the Foundation Gaps at the bottom

- Concentrate equally across the attainment spectrum, at the top and bottom and all points in between.

Speaking as an advocate for those at the top, I favour the third option.

It seems to me incontrovertible – though hard to quantify – that, in the English education system, the lion’s share of resources goes towards closing the Foundation Gaps.

That is perhaps as it should be, although one could wish that the financial scales were not tipped so excessively in their direction, for ‘poor but bright’ learners do in my view have an equal right to challenge and support, and should not be penalised for their high attainment.

Our current efforts to understand the relative size of the Foundation and Excellence Gaps and how these are changing over time are seriously compromised by the limited data in the public domain.

There is a powerful economic case to be made for prioritising the Foundation Gaps as part of a deliberate strategy for shortening the tail – but an equally powerful case can be constructed for prioritising the Excellence Gaps, as part of a deliberate strategy for increasing the smart fraction.

Neither of these options is optimal from an equity perspective, however. The stream of benefits might be compromised somewhat by not focusing exclusively on one or the other, but a balanced approach should otherwise be in our collective best interests.

You may or may not agree. Here is a poll so you can register your vote. Please use the comments facility to share your wider views on this post.

Epilogue

We must beware the romance of the poor but bright,

But equally beware

The romance of rescuing the helpless

Poor from their sorry plight.

We must ensure the Tail

Does not wag the disadvantaged dog!

GP

May 2014
