.

This is my annual analysis of high attainment and high attainers’ performance in the Secondary School and College Performance Tables.

It draws on the 2014 Secondary and 16-18 Tables, as well as three statistical releases published alongside them:

It also reports trends since 2012 and 2013, while acknowledging the comparability issues at secondary level this year.

This is a companion piece to previous posts on:

- High attainment in the 2014 Primary Performance Tables (December 2014) and

The post opens with the headlines from the subsequent analysis. These are followed by a discussion of definitions and comparability issues.

Two substantive sections deal respectively with secondary and post-16 measures. The post-16 analysis focuses exclusively on A level results. There is a brief postscript on the performance of disadvantaged high attainers.

As ever I apologise in advance for any transcription errors and invite readers to notify me of any they spot, so that I can make the necessary corrections.

.

Headlines

At KS4:

- High attainers constitute 32.4% of the cohort attending state-funded schools, but this masks some variation by school type. The percentage attending converter academies (38.4%) has fallen by nine percentage points since 2011 but remains almost double the percentage attending sponsored academies (21.2%).

- Female high attainers (33.7%) continue to outnumber males (32.1%). The percentage of high-attaining males has fallen very slightly since 2013 while the proportion of high-attaining females has slightly increased.

- 88.8% of the GCSE cohort attending selective schools are high attainers, virtually unchanged from 2013. The percentages in comprehensive schools (30.9%) and modern schools (21.0%) are also little changed.

- These figures mask significant variation between schools. Ten grammar schools have a GCSE cohort consisting entirely of high attainers but, at the other extreme, one has only 52%.

- Some comprehensive schools have more high attainers than some grammars: the highest percentage recorded in 2014 by a comprehensive is 86%. Modern schools are also extremely variable, with high attainer populations ranging from 4% to 45%. Schools with small populations of high attainers report very different success rates for them on the headline measures.

- The fact that 11.2% of the selective school cohort are middle attainers reminds us that 11+ selection is not based on prior attainment. Middle attainers in selective schools perform significantly better than those in comprehensive schools, but worse than high attainers in comprehensives.

- 92.8% of high attainers in state-funded schools achieved 5 or more GCSEs at grades A*-C (or equivalent) including GCSEs in English and maths. While the success rate for all learners is down by four percentage points compared with 2013, the decline is less pronounced for high attainers (1.9 points).

- In 340 schools 100% of high attainers achieved this measure, down from 530 in 2013. Fifty-seven schools record 67% or less compared with only 14 in 2013. Four of the 57 had a better success rate for middle attainers than for high attainers.

- 93.8% of high attainers in state-funded schools achieved GCSE grades A*-C in English and maths. The success rate for high attainers has fallen less than the rate for the cohort as a whole (1.3 points against 2.4 points). Some 470 schools achieved 100% success amongst their high attainers on this measure, down 140 compared with 2013. Thirty-eight schools were at 67% or lower compared with only 12 in 2013. Five of these boast a higher success rate for their middle attainers than their high attainers (and four of the five also do so on the 5+ A*-C including English and maths measure).

- 68.8% of high attainers were entered for the EBacc and 55% achieved it. The entry rate is up 3.8 percentage points and the success rate up 2.9 points compared with 2013. Sixty-seven schools entered 100% of their high attainers, but only five schools managed 100% success. Thirty-seven schools entered no high attainers at all and 53 had no successful high attainers.

- 85.6% of high attainers made at least the expected progress in English and 84.7% did so in maths. Both are down on 2013 but much more so in maths (3.1 percentage points) than in English (0.6 points).

- In 108 schools every high attainer made the requisite progress in English. In 99 schools the same was true of maths. Only 21 schools managed 100% success in both English and maths. At the other extreme there were seven schools in which 50% or fewer made expected progress in both English and maths. Several schools recording 50% or below in either English or maths did significantly better with their middle attainers.

- In sponsored academies one in four high attainers do not make the expected progress in maths and one in five do not do so in English. In free schools one in every five high attainers falls short in English as do one in six in maths.

At KS5:

- 11.9% of students at state-funded schools and colleges achieved AAB grades at A level or higher, with at least two in facilitating subjects. This is a slight fall compared with the 12.1% that did so in 2013. The best-performing state institution had a success rate of 83%.

- 14.1% of A levels taken in selective schools in 2014 were graded A* and 41.1% were graded A* or A. In selective schools 26.1% of the cohort achieved AAA or higher and 32.3% achieved AAB or higher with at least two in facilitating subjects.

- Across all schools, independent as well as state-funded, the proportion of students achieving three or more A level grades at A*/A is falling and the gap between the success rates of boys and girls is increasing.

- Boys are more successful than girls on three of the four high attainment measures, the only exception being the least demanding (AAB or higher in any subjects).

- The highest recorded A level point score per A level student in a state-funded institution in 2014 is 1430.1, compared with an average of 772.7. The lowest is 288.4. The highest APS per A level entry is 271.1 compared with an average of 211.2. The lowest recorded is 108.6.

Disadvantaged high attainers:

- On the majority of the KS4 headline measures gaps between FSM and non-FSM performance are increasing, even when the 2013 methodology is applied to control for the impact of the reforms affecting comparability. Very limited improvement has been made against any of the five headline measures between 2011 and 2014. It seems that the pupil premium has had little impact to date on either attainment or progress. Although no separate information is forthcoming about the performance of disadvantaged high attainers, it is highly likely that excellence gaps are equally unaffected.

.

Definitions and comparability issues

.

Definitions

The Secondary and 16-18 Tables take very different approaches, since the former deals exclusively with high attainers while the latter concentrates exclusively on high attainment.

The Secondary Tables define high attainers according to their prior attainment on end of KS2 tests. Most learners in the 2014 GCSE cohort will have taken these five years previously, in 2009.

The new supporting documentation describes the distinction between high, middle and low attainers thus:

- low attaining = those below level 4 in the key stage 2 tests

- middle attaining = those at level 4 in the key stage 2 tests

- high attaining = those above level 4 in the key stage 2 tests.

Last year the equivalent statement added:

‘To establish a pupil’s KS2 attainment level, we calculated the pupil’s average point score in national curriculum tests for English, maths and science and classified those with a point score of less than 24 as low; those between 24 and 29.99 as middle, and those with 30 or more as high attaining.’

This is now missing, but the methodology is presumably unchanged.

It means that high attainers will tend to be ‘all-rounders’, whose performance is at least middling in each assessment. Those who are exceptionally high achievers in one area but poor in others are unlikely to qualify.
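The banding rule quoted above can be sketched in a few lines of Python. This is only an illustration of the thresholds in last year's statement, not the Department's actual code: the function name is hypothetical, and the point scores used in the examples (21, 27 and 33 for levels 3, 4 and 5) are my assumption about the standard KS2 point scale.

```python
def classify_ks2(english: float, maths: float, science: float) -> str:
    """Band a pupil by average point score (APS) across the three KS2 tests."""
    aps = (english + maths + science) / 3
    if aps < 24:
        return "low"
    if aps < 30:
        return "middle"
    return "high"


# Assumed point scores per test: level 3 = 21, level 4 = 27, level 5 = 33.
all_rounder = classify_ks2(33, 33, 27)  # APS 31: strong in two tests, secure in the third
specialist = classify_ks2(33, 21, 21)   # APS 25: exceptional in one test, weak in the others

print(all_rounder, specialist)
```

The second pupil reaches level 5 in one test but is still banded as a middle attainer, which is why the definition tends to favour all-rounders.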

There is nothing in the Secondary Tables or the supporting SFRs about high attainment, such as measures of GCSE achievement at grades A*/A.

By contrast, the 16-18 Tables do not distinguish high attainers, but do deploy a high attainment measure:

‘The percentage of A level students achieving grades AAB or higher in at least two facilitating subjects’

Facilitating subjects include:

‘biology, chemistry, physics, mathematics, further mathematics, geography, history, English literature, modern and classical languages.’

The supporting documentation says:

‘Students who already have a good idea of what they want to study at university should check the usual entry requirements for their chosen course and ensure that their choices at advanced level include any required subjects. Students who are less sure will want to keep their options open while they decide what to do. These students might want to consider choosing at least two facilitating subjects because they are most commonly required for entry to degree courses at Russell Group universities. The study of A levels in particular subjects does not, of course, guarantee anyone a place. Entry to university is competitive and achieving good grades is also important.’

The 2013 Tables also included percentages of students achieving three A levels at grades AAB or higher in facilitating subjects, but this has now been dropped.

The Statement of Intent for the 2014 Tables explains:

‘As announced in the government’s response to the consultation on 16-19 accountability earlier this year, we intend to maintain the AAB measure in performance tables as a standard of academic rigour. However, to address the concerns raised in the 16-19 accountability consultation, we will only require two of the subjects to be in facilitating subjects. Therefore, the indicator based on three facilitating subjects will no longer be reported in the performance tables.’

Both these measures appear in SFR03/15, alongside two others:

- Percentage of students achieving 3 A*-A grades or better at A level or applied single/double award A level.

- Percentage of students achieving grades AAB or better at A level or applied single/double award A level.

.

Comparability Issues

When it comes to analysis of the Secondary Tables, comparisons with previous years are compromised by changes to the way in which performance is measured.

Both SFRs carry an initial warning:

‘Two major reforms have been implemented which affect the calculation of key stage 4 (KS4) performance measures data in 2014:

- Professor Alison Wolf’s Review of Vocational Education recommendations which:

- restrict the qualifications counted

- prevent any qualification from counting as larger than one GCSE

- cap the number of non-GCSEs included in performance measures at two per pupil

- An early entry policy to only count a pupil’s first attempt at a qualification.’

SFR02/15 explains that some data has been presented ‘on two alternative bases’:

- Using the 2014 methodology with the changes above applied and

- Using a proxy 2013 methodology where the effect of these two changes has been removed.

It points out that more minor changes have not been accounted for, including the removal of unregulated IGCSEs, the application of discounting across different qualification types, the shift to linear GCSE formats and the removal of the speaking and listening component from English.

Moreover, the proxy measure does not:

‘…isolate the impact of changes in school behaviour due to policy changes. For example, we can count best entry results rather than first entry results but some schools will have adjusted their behaviours according to the policy changes and stopped entering pupils in the same patterns as they would have done before the policy was introduced.’

Nevertheless, the proxy is the best available guide to what outcomes would have been had the two reforms above not been introduced. Unfortunately, it has been applied rather sparingly.

Rather than ignore trends completely, this post includes information about changes in high attainers’ GCSE performance compared with previous years, not least so readers can see the impact of the changes that have been introduced.

It is important that we do not allow the impact of these changes to be used as a smokescreen masking negligible improvement or even declines in national performance on key measures.

But we cannot escape the fact that the 2014 figures are not fully comparable with those for previous years. Several of the tables in SFR06/2015 carry a warning in red to this effect (but not those in SFR02/2015).

A few less substantive changes also impact slightly on the comparability of A level results: the withdrawal of January examinations and ‘automatic add back’ of students whose results were deferred from the previous year because they had not completed their 16-18 study programme.

.

Secondary outcomes

.

The High Attainer Population

The Secondary Performance Tables show that there were 172,115 high attainers from state-funded schools within the relevant cohort in 2014, who together account for 32.3% of the entire state-funded school cohort.

This is some 2% fewer than the 175,797 recorded in 2013, which constituted 32.4% of that year’s cohort.

SFR02/2015 provides information about the incidence of high, middle and low attainers by school type and gender.

Chart 1, below, compares the proportion of high attainers by type of school, showing changes since 2011.

The high attainer population across all state-funded mainstream schools has remained relatively stable over the period and currently stands at 32.9%. The corresponding percentage in LA-maintained mainstream schools is slightly lower: the difference is exactly two percentage points in 2014.

High attainers constitute only around one-fifth of the student population of sponsored academies, but close to double that in converter academies. The former percentage is relatively stable but the latter has fallen by some nine percentage points since 2011, presumably as the size of this sector has increased.

The percentage of high attainers in free schools is similar to that in converter academies but has fluctuated over the three years for which data is available. The comparison between 2014 and previous years will have been affected by the inclusion of UTCs and studio schools prior to 2014.

.

*Pre-2014 includes UTCs and studio schools; 2014 includes free schools only

Chart 1: Percentage of high attainers by school type, 2011-2014

.

Table 1 shows that, in each year since 2011, there has been a slightly higher percentage of female high attainers than male, the gap varying between 0.4 percentage points (2012) and 1.8 percentage points (2011).

The percentage of high-attaining boys in 2014 is the lowest it has been over this period, while the percentage of high-attaining girls is slightly higher than it was in 2013 but has not returned to 2011 levels.

.

| Year | Boys | Girls |
|------|------|-------|
| 2014 | 32.1 | 33.7 |
| 2013 | 32.3 | 33.3 |
| 2012 | 33.4 | 33.8 |
| 2011 | 32.6 | 34.4 |

Table 1: Percentage of high attainers by gender, all state-funded mainstream schools 2011-14
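For readers who want to check the gender gaps quoted above, here is a minimal sketch that recomputes them from the Table 1 figures. The variable names are mine and purely illustrative.

```python
# Table 1: percentage of high attainers by gender, as (boys, girls) per year.
table_1 = {
    2014: (32.1, 33.7),
    2013: (32.3, 33.3),
    2012: (33.4, 33.8),
    2011: (32.6, 34.4),
}

# Gap in percentage points (girls minus boys), rounded to one decimal place.
gaps = {year: round(girls - boys, 1) for year, (boys, girls) in table_1.items()}

narrowest = min(gaps, key=gaps.get)
widest = max(gaps, key=gaps.get)

for year, gap in gaps.items():
    print(year, gap)
print("narrowest:", narrowest, "widest:", widest)
```

The narrowest and widest gaps fall in 2012 and 2011 respectively, matching the range given in the text.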

.

Table 2 shows that the percentage of high attainers in selective schools is almost unchanged from 2013, at just under 89%. This compares with almost 31% in comprehensive schools, unchanged from 2013, and 21% in modern schools, the highest it has been over this period.

The 11.2% of learners in selective schools who are middle attainers remind us that selection by ability through 11-plus tests gives a somewhat different sample than selection exclusively on the basis of KS2 attainment.

.

| Year | Selective | Comprehensive | Modern |
|------|-----------|---------------|--------|
| 2014 | 88.8 | 30.9 | 21.0 |
| 2013 | 88.9 | 30.9 | 20.5 |
| 2012 | 89.8 | 31.7 | 20.9 |
| 2011 | 90.3 | 31.6 | 20.4 |

Table 2: Percentage of high attainers by admissions practice, 2011-14

.

The SFR shows that these middle attainers in selective schools are less successful than their high attaining peers, and slightly less successful than high attainers in comprehensives, but they are considerably more successful than middle attaining learners in comprehensive schools.

For example, in 2014 the 5+ A*-C grades including English and maths measure is achieved by:

- 97.8% of high attainers in selective schools

- 92.2% of high attainers in comprehensive schools

- 88.1% of middle attainers in selective schools and

- 50.8% of middle attainers in comprehensive schools.

A previous post ‘The Politics of Selection: Grammar schools and disadvantage’ (November 2014) explored how some grammar schools are significantly more selective than others – as measured by the percentage of high attainers within their GCSE cohorts – and the fact that some comprehensives are more selective than some grammar schools.

This is again borne out by the 2014 Performance Tables, which show that 10 selective schools have a cohort consisting entirely of high attainers, the same as in 2013. Eighty-nine selective schools have a high attainer population of 90% or more.

However, five are at 70% or below, with the lowest – Dover Grammar School for Boys – registering only 52% high attainers.

By comparison, comprehensives such as King’s Priory School, North Shields and Dame Alice Owen’s School, Potters Bar record 86% and 77% high attainers respectively.

There is also huge variation in modern schools, from Coombe Girls’ in Kingston, at 45%, just seven percentage points shy of the lowest recorded in a selective school, to The Ellington and Hereson School, Ramsgate, at just 4%.

Two studio colleges say they have no high attainers at all, while 96 schools have 10% or fewer. A significant proportion of these are academies located in rural and coastal areas.

Even though results are suppressed where there are too few high attainers, it is evident that these small cohorts perform very differently in different schools.

Amongst those with a high attainer population of 10% or fewer, the proportion achieving:

- 5+ A*-C grades including English and maths varies from 44% to 100%

- EBacc ranges from 0% to 89%

- expected progress in English varies between 22% and 100% and expected progress in maths between 27% and 100%.

.

5+ GCSEs (or equivalent) at A*-C including GCSEs in English and maths

The Tables show that:

- 92.8% of high attainers in state-funded schools achieved five or more GCSEs (or equivalent) including GCSEs in English and maths. This compares with 56.6% of all learners. Allowing of course for the impact of 2014 reforms, the latter is a full four percentage points down on the 2013 outcome. By comparison, the outcome for high attainers is down 1.9 percentage points, slightly less than half the overall decline. Roughly one in every fourteen high attainers fails to achieve this benchmark.

- 340 schools achieve 100% on this measure, significantly fewer than the 530 that did so in 2013 and the 480 managing this in 2012. In 2013, 14 schools registered 67% or fewer high attainers achieving this outcome, whereas in 2014 this number has increased substantially, to 57 schools. Five schools record 0%, including selective Bourne Grammar School, Lincolnshire, hopefully because of their choice of IGCSEs. Six more are at 25% or lower.

- Amongst the 57 schools recording 67% or lower, four achieved a higher success rate for their middle attainers. These were: Nicholas Chamberlaine Technology College, Fullbrook School, Wingfield Academy and Nottingham Girls Academy.

.

A*-C grades in GCSE English and maths

The Tables reveal that:

- 93.8% of high attainers in state-funded schools achieved A*-C grades in GCSE English and maths, compared with 58.9% of all pupils. The latter percentage is down by 2.4 percentage points but the former has fallen by only 1.3 percentage points. Roughly one in 16 high attainers fails to achieve this measure.

- In 2014 the number of schools with 100% of high attainers achieving this measure has fallen to some 470, 140 fewer than in 2013 and 60 fewer than in 2012. There were 38 schools recording 67% or lower, a significant increase compared with 12 in 2013 and 18 in 2012. Of these, four are listed at 0% (Bourne Grammar is at 1%) and five more are at 25% or lower.

- Amongst the 38 schools recording 67% or lower, five return a higher success rate for their middle attainers than for their high attainers. Four of these are the same schools that do so on the 5+ A*-C measure above. They are joined by Tong High School.
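The "one in every N" figures quoted for these two benchmarks follow directly from the national success rates. A rough sketch of the arithmetic, using a hypothetical helper name of my own:

```python
def one_in_n(success_rate_pct: float) -> float:
    """Return N such that roughly one pupil in every N misses the benchmark."""
    failure_rate_pct = 100 - success_rate_pct
    return 100 / failure_rate_pct


# National success rates for high attainers in state-funded schools, 2014.
five_plus_incl_em = round(one_in_n(92.8), 1)  # 5+ A*-C including English and maths
english_and_maths = round(one_in_n(93.8), 1)  # A*-C in English and maths

print(five_plus_incl_em, english_and_maths)
```

The results round to one in fourteen and one in sixteen respectively.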

.

Entry to and achievement of the EBacc

The Tables indicate that:

- 68.8% of high attainers in state-funded schools were entered for all EBacc subjects and 55.0% achieved the EBacc. The entry rate is up by 3.8 percentage points compared with 2013, and the success rate is up by 2.9 percentage points. By comparison, 31.5% of middle attainers were entered (up 3.7 points) and 12.7% passed (up 0.9 points). Between 2012 and 2013 the entry rate for high attainers increased by 19 percentage points, so the rate of improvement has slowed significantly. Given the impending introduction of the Attainment 8 measure, commitment to the EBacc is presumably waning.

- That said, 67 schools entered 100% of their high attainers for the EBacc, up from 47 in 2013. Success rates in these schools varied tremendously, from 25% to 100%. Only five schools achieved a 100% success rate for high attainers: King Solomon Academy, Preston Muslim Girls’ High School, Queen Elizabeth’s Barnet, Tauheedul Islam Girls’ High School and Yesodey Hatorah Senior Girls School. Four schools managed this in 2013 but only one, Queen Elizabeth’s Barnet, did so in both years.

- Thirty-seven schools entered no high attainers for the EBacc, compared with 55 in 2013 and 186 in 2012. Only 53 schools had no high attainers achieving the EBacc, compared with 79 in 2013 and 235 in 2012. Of these 53, 11 recorded a positive success rate for their middle attainers, though the difference was relatively small in all cases.

.

At least 3 Levels of Progress in English and maths

The Tables show that:

- Across all state-funded schools 85.6% of high attainers made at least the expected progress in English while 84.7% did so in maths. The corresponding figures for middle attainers are 70.2% in English and 65.3% in maths. Compared with 2013, the percentages for high attainers are down 0.6 percentage points in English and down 3.1 percentage points in maths, presumably because the first entry only rule has had more impact in the latter. Even allowing for the depressing effect of the changes outlined above, it is unacceptable that more than one in every seven high attainers fails to make the requisite progress in each of these core subjects, especially when the progress expected is relatively undemanding for such students.

- There were 108 schools in which every high attainer made at least the expected progress in English, exactly the same as in 2013. There were 99 schools which achieved the same outcome in maths, down significantly from 120 in 2013. In 2013 there were 36 schools which managed this in both English and maths, but only 21 did so in 2014.

- At the other extreme, four schools recorded no high attainers making the expected progress in English, presumably because of their choice of IGCSE. Sixty-five schools were at or below 50% on this measure. In maths 67 schools were at or below 50%, but the lowest recorded outcome was 16%, at Oasis Academy, Hextable.

- Seven schools were at or below 50% on both progress measures. The worst aggregate results were returned by Hull Studio School (18% in English; 24% in maths) and Manchester Creative and Media Academy for Boys (21% English and 29% maths). Both are now closed.

- Half of the schools achieving 50% or less with their high attainers in English or maths also returned better results with middle attainers. Particularly glaring differentials in English include Red House Academy (50% middle attainers and 22% high attainers) and Wingfield Academy (73% middle attainers; 36% high attainers). In maths the worst examples are Oasis Academy Hextable (55% middle attainers and 16% high attainers), Sir John Hunt Community Sports College (45% middle attainers and 17% high attainers) and Roseberry College and Sixth Form (now closed) (49% middle attainers and 21% high attainers).

.

Comparing achievement of these measures by school type and admissions basis

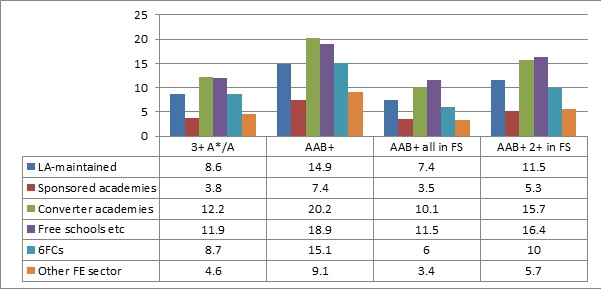

SFR02/2015 compares the performance of high attainers in different types of school on each of the five measures discussed above. This data is presented in Chart 2 below.

.

Chart 2: Comparison of high attainers’ GCSE performance by type of school, 2014

.

It shows that:

- There is significant variation on all five measures, though it is most pronounced for achievement of the EBacc, where there is a difference of more than 20 percentage points between the success rates in sponsored academies (39.2%) and in converter academies (59.9%).

- Converter academies are the strongest performers across the board, while sponsored academies are consistently the weakest. LA-maintained mainstream schools out-perform free schools on four of the five measures, the only exception being expected progress in maths.

- Free schools and converter academies achieve stronger performance on progress in maths than on progress in English, but the reverse is true in sponsored academies and LA-maintained schools.

- Sponsored academies and free schools are both registering relatively poor performance on the EBacc measure and the two progress measures.

- One in four high attainers in sponsored academies fails to make the requisite progress in maths while one in five fails to do so in English. Moreover, one in five high attainers in free schools fails to make the expected progress in English and one in six in maths. These rates of progress are unacceptably low.

Comparisons with 2013 outcomes show a general decline, with the exception of EBacc achievement.

This is particularly pronounced in sponsored academies, where there have been falls of 5.2 percentage points on 5+ A*-Cs including English and maths, 5.7 points on A*-C in English and maths and 4.7 points on expected progress in maths. However, expected progress in English has held up well by comparison, with a fall of just 0.6 percentage points.

Progress in maths has declined more than progress in English across the board. In converter academies progress in maths is down 3.1 points, while progress in English is down 1.1 points. In LA-maintained schools, the corresponding falls are 3.4 and 0.4 points respectively.

EBacc achievement is up by 4.5 percentage points in sponsored academies, 3.1 points in LA-maintained schools and 1.8 points in converter academies.

.

Comparing achievement of these measures by school admissions basis

SFR02/2015 compares the performance of high attainers in selective, comprehensive and modern schools on these five measures. Chart 3 illustrates these comparisons.

.

Chart 3: Comparison of high attainers’ GCSE performance by school admissions basis, 2014

.

It is evident that:

- High attainers in selective schools outperform those in comprehensive schools on all five measures. The biggest difference is in relation to EBacc achievement (21.6 percentage points). There is a 12.8 point advantage in relation to expected progress in maths and an 8.7 point advantage on expected progress in English.

- Similarly, high attainers in comprehensive schools outperform those in modern schools. They enjoy a 14.7 percentage point advantage in relation to achievement of the EBacc, but, otherwise, the differences are between 1.6 and 3.5 percentage points.

- Hence there is, by and large, a smaller gap between the performance of high attainers in modern and comprehensive schools than there is between high attainers in comprehensive and selective schools.

- Only selective schools are more successful in achieving expected progress in maths than they are in English. It is a cause for some concern that, even in selective schools, 6.5% of pupils are failing to make at least three levels of progress in English.

Compared with 2013, results have typically improved in selective schools but worsened in comprehensive and modern schools. For example:

- Achievement of the 5+ GCSE measure is up 0.5 percentage points in selective schools but down 2.3 points in comprehensives and modern schools.

- In selective schools, the success rate for expected progress in English is up 0.5 points and in maths it is up 0.4 points. However, in comprehensive schools progress in English and maths are both down, by 0.7 points and 3.5 points respectively. In modern schools, progress in English is up 0.3 percentage points while progress in maths is down 4.1 percentage points.

When it comes to EBacc achievement, the success rate is unchanged in selective schools, up 3.1 points in comprehensives and up 5 points in modern schools.

.

Other measures

The Secondary Performance Tables also provide information about the performance of high attainers on several other measures, including:

- Average Points Score (APS): Annex B of the Statement of Intent says that, as in 2013, the Tables will include APS (best 8) for ‘all qualifications’ and ‘GCSEs only’. At the time of writing, only the former appears in the 2014 Tables. For high attainers, the APS (best 8) all qualifications across all state-funded schools is 386.2, which compares unfavourably with 396.1 in 2013. Four selective schools managed to exceed 450 points: Pate’s Grammar School (455.1); The Tiffin Girls’ School (452.1); Reading School (451.4); and Colyton Grammar School (450.6). The best result in 2013 was 459.5, again at Colyton Grammar School. At the other end of the table, only one school returns a score of under 250 for their high attainers, Pent Valley Technology College (248.1). The lowest recorded score in 2013 was significantly higher at 277.3.

- Value Added (best 8) prior attainment: The VA score for all state-funded schools in 2014 is 1000.3, compared with 1001.5 in 2013. Five schools returned a result over 1050, whereas four did so in 2013. The 2014 leaders are: Tauheedul Islam Girls School (1070.7); Yesodey Hatorah Senior Girls School (1057.8); The City Academy Hackney (1051.4); The Skinner’s School (1051.2); and Hasmonean High School (1050.9). At the other extreme, 12 schools were at 900 or below, compared with just three in 2013. The lowest performer on this measure is Hull Studio School (851.2).

- Average grade: As in the case of APS, the average grade per pupil per GCSE has not yet materialised. The average grade per pupil per qualification is supplied. Five selective schools return A*-, including Henrietta Barnett, Pate’s, Reading School, Tiffin Girls and Tonbridge Grammar. Only Henrietta Barnett and Pate’s managed this in 2013.

- Number of exam entries: Yet again we only have number of entries for all qualifications and not for GCSE only. The average number of entries per high attainer across state-funded schools is 10.4, compared with 12.1 in 2013. This 1.7 reduction is smaller than for middle attainers (down 2.5 from 11.4 to 8.9) and low attainers (down 3.7 from 10.1 to 6.4). The highest number of entries per high attainer was 14.2 at Gable Hall School and the lowest was 5.9 at The Midland Studio College Hinckley.

.

16-18: A level outcomes

.

A level grades AAB or higher in at least two facilitating subjects

The 16-18 Tables show that 11.9% of students in state-funded schools and colleges achieved AAB+ with at least two in facilitating subjects. This is slightly lower than the 12.1% recorded in 2013.

The best-performing state-funded institution is a further education college, Cambridge Regional College, which records 83%. The only other state-funded institution above 80% is The Henrietta Barnett School. At the other end of the spectrum, some 443 institutions are at 0%.

Table 3, derived from SFR03/2015, reveals how performance on this measure has changed since 2013 for different types of institution and for schools with different admission arrangements.

.

| | 2013 (%) | 2014 (%) |
|---|---|---|
| LA-maintained school | 11.4 | 11.5 |
| Sponsored academy | 5.4 | 5.3 |
| Converter academy | 16.4 | 15.7 |
| Free school* | 11.3 | 16.4 |
| Sixth form college | 10.4 | 10.0 |
| Other FE college | 5.8 | 5.7 |
| Selective school | 32.4 | 32.3 |
| Comprehensive school | 10.7 | 10.5 |
| Modern school | 2.0 | 3.2 |

.

The substantive change for free schools will be affected by the inclusion of UTCs and studio schools in that line in 2013 and the addition of city technology colleges and 16-19 free schools in 2014.

Otherwise the general trend is slightly downwards but LA-maintained schools have improved very slightly and modern schools have improved significantly.
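The year-on-year movements in Table 3 can be reproduced with a few lines of Python. This is a minimal sketch that simply re-keys the table values by hand; the variable names are my own and the figures are those printed above, not a fresh extract from the SFR.

```python
# Percentage of students achieving AAB+ with at least two facilitating
# subjects, copied from Table 3 as (2013, 2014).
table3 = {
    "LA-maintained school": (11.4, 11.5),
    "Sponsored academy": (5.4, 5.3),
    "Converter academy": (16.4, 15.7),
    "Free school": (11.3, 16.4),  # category definition changed between years
    "Sixth form college": (10.4, 10.0),
    "Other FE college": (5.8, 5.7),
    "Selective school": (32.4, 32.3),
    "Comprehensive school": (10.7, 10.5),
    "Modern school": (2.0, 3.2),
}

# Change in percentage points between 2013 and 2014.
changes = {
    name: round(y2014 - y2013, 1) for name, (y2013, y2014) in table3.items()
}

# Categories whose rate rose in 2014.
improved = [name for name, change in changes.items() if change > 0]

for name, change in changes.items():
    print(f"{name}: {change:+.1f} percentage points")
```

Only three rows show an increase: LA-maintained schools, free schools and modern schools. The free school figure should be treated with caution for the reasons given above.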

.

Other measures of high A level attainment

SFR03/2015 provides outcomes for three other measures of high A level attainment:

- 3 A*/A grades or better at A level, or applied single/double award A level

- Grades AAB or better at A level, or applied single/double award A level

- Grades AAB or better at A level all of which are in facilitating subjects.

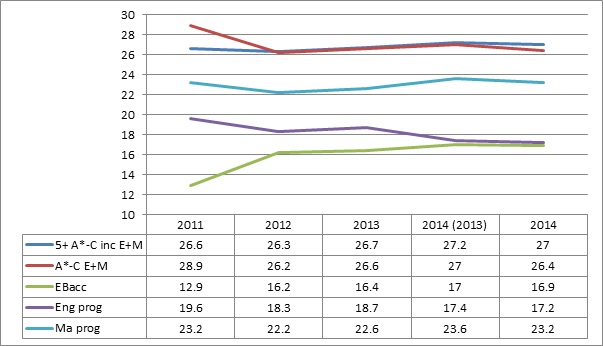

Chart 4, below, compares performance across all state-funded schools and colleges on all four measures, showing results separately for boys and girls.

Boys are in the ascendancy on three of the four measures, the one exception being AAB grades or higher in any subjects. The gaps are more substantial where facilitating subjects are involved.

.

Chart 4: A level high attainment measures by gender, 2014

.

The SFR provides a time series for the achievement of the 3+ A*/A measure, for all schools – including independent schools – and colleges. The 2014 success rate is 12.0%, down 0.5 percentage points compared with 2013.

The trend over time is shown in Chart 5 below. This shows how results for boys and girls alike are slowly declining, having reached their peak in 2010/11. Boys established a clear lead from that year onwards.

As they decline, the lines for boys and girls are steadily diverging since girls’ results are falling more rapidly. The gap between boys and girls in 2014 is 1.3 percentage points.

.

Chart 5: Achievement of 3+ A*/A grades in independent and state-funded schools and in colleges, 2006-2014

.

Chart 6 compares performance on the four different measures by institutional type. It shows a similar pattern across the piece.

Success rates tend to be highest in either converter academies or free schools, while sponsored academies and other FE institutions tend to bring up the rear. LA-maintained schools and sixth form colleges lie midway between.

Converter academies outscore free schools when facilitating subjects do not enter the equation, but the reverse is true when they do. There is a similar relationship between sixth form colleges and LA-maintained schools, but it does not quite hold with the final pair, sponsored academies and other FE institutions.

.

Chart 6: Proportion of students achieving different A level high attainment measures by type of institution, 2014

.

Chart 7 compares performance by admissions policy in the schools sector on the four measures. Selective schools enjoy a big advantage on all four. More than one in four selective school students achieves at least 3 A grades and almost one in three achieves AAB+ with at least two in facilitating subjects.

There is a broadly similar relationship across all the measures, in that comprehensive schools record roughly three times the rates achieved in modern schools and selective schools manage roughly three times the success rates in comprehensive schools.
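The 'roughly three times' relationship can be checked against the one measure for which figures are printed in this post, namely the 2014 column of Table 3 (AAB+ with at least two facilitating subjects). The sketch below uses only those three values; the other three measures appear only in the chart, so they are not covered here, and the variable names are illustrative.

```python
# 2014 percentages for AAB+ with at least two facilitating subjects,
# taken from Table 3 (schools by admissions basis).
rates_2014 = {
    "Selective": 32.3,
    "Comprehensive": 10.5,
    "Modern": 3.2,
}

# How many times higher is each sector's rate than the one below it?
selective_vs_comprehensive = rates_2014["Selective"] / rates_2014["Comprehensive"]
comprehensive_vs_modern = rates_2014["Comprehensive"] / rates_2014["Modern"]

print(f"Selective vs comprehensive: {selective_vs_comprehensive:.1f}x")
print(f"Comprehensive vs modern: {comprehensive_vs_modern:.1f}x")
```

Both ratios come out a little above three on this measure, which is consistent with the broad pattern described.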

.

Chart 7: Proportion of students achieving different A level high attainment measures by admissions basis in schools, 2014

.

Other Performance Table measures

Some of the other measures in the 16-18 Tables are relevant to high attainment:

- Average Point Score per A level student: The APS per student across all state funded schools and colleges is 772.7, down slightly on the 782.3 recorded last year. The highest recorded APS in 2014 is 1430.1, by Colchester Royal Grammar School. This is almost 100 ahead of the next best school, Colyton Grammar, but well short of the highest score in 2013, which was 1650. The lowest APS for a state-funded school in 2014 is 288.4 at Hartsdown Academy, which also returned the lowest score in 2013.

- Average Point Score per A level entry: The APS per A level entry for all state-funded institutions is 211.2, almost identical to the 211.3 recorded in 2013. The highest score attributable to a state-funded institution is 271.1 at The Henrietta Barnett School. This is very slightly lower than the 271.4 achieved by Queen Elizabeth’s Barnet in 2013. The lowest is 108.6, again at Hartsdown Academy, which exceeds the 2013 low of 97.7 at Appleton Academy.

- Average grade per A level entry: The average grade across state-funded schools and colleges is C. The highest average grade returned in the state-funded sector is A at The Henrietta Barnett School, Pate’s Grammar School, Queen Elizabeth’s Barnet and Tiffin Girls School. In 2013 only the two Barnet schools achieved the same outcome. At the other extreme, an average U grade is returned by Hartsdown Academy, Irlam and Cadishead College and Swadelands School.

- A level value-added: The highest value-added score amongst state-funded institutions is 0.56, registered by King James I Academy, Bishop Auckland. The lowest is -1.04 by The Gateway Academy. In 2013 the highest was 0.61 and the lowest -1.03.

SFR06/2015 also supplies the percentage of A* and A*/A grades by type of institution and schools’ admissions arrangements. The former is shown in Chart 8 and the latter in Chart 9 below.

The free school comparisons are affected by the changes to this category described above.

Elsewhere the pattern is rather inconsistent. Success rates at A* exceed those set in 2012 and 2013 in LA-maintained schools, sponsored academies, sixth form colleges and other FE institutions. Meanwhile, A*/A grades combined are lower than both 2012 and 2013 in converter academies and sixth form colleges.

.

Chart 8: A level A* and A*/A performance by institutional type, 2012 to 2014

.

Chart 9 shows A* performance exceeding the success rates for 2012 and 2013 in all three sectors.

When both grades are included, success rates in selective schools have returned almost to 2012 levels following a dip in 2013, while there has been little change across the three years in comprehensive schools and a clear improvement in modern schools, which also experienced a dip last year.

.

Chart 9: A level A* and A*/A performance in schools by admissions basis, 2012 to 2014.

.

Disadvantaged high attainers

There is nothing in either of the Performance Tables or the supporting SFRs to enable us to detect changes in the performance of disadvantaged high attainers relative to their more advantaged peers.

I dedicated a previous post to the very few published statistics available to quantify the size of these excellence gaps and establish if they are closing, stable or widening.

There is continuing uncertainty whether this will be addressed under the new assessment and accountability arrangements to be introduced from 2016.

Although results for all high attainers appear to be holding up better than those for middle and lower attainers, the evidence suggests that FSM and disadvantaged gaps at lower attainment levels are proving stubbornly resistant to closure.

Data from SFR06/2015 is presented in Charts 10-12 below.

Chart 10 shows that, when the 2014 methodology is applied, three of the gaps on the five headline measures increased in 2014 compared with 2013.

That might have been expected given the impact of the changes discussed above but, if the 2013 methodology is applied, so stripping out much (but not all) of the impact of these reforms, four of the five headline gaps worsened and the original three are even wider.

This seems to support the hypothesis that the reforms themselves are not driving this negative trend, although Teach First has suggested otherwise.

.

Chart 10: FSM gaps for headline GCSE measures, 2013-2014

.

Chart 11 shows how FSM gaps have changed on each of these five measures since 2011. Both sets of 2014 figures are included.

Compared with 2011, there has been improvement on two of the five measures, while two or three have deteriorated, depending which methodology is applied for 2014.

Since 2012, only one measure has improved (expected progress in English) and that by slightly more or less than 1%, according to which 2014 methodology is selected.

Deteriorations have been small, however, suggesting that FSM gaps have been relatively stable over this period, despite their closure being a top priority for the Government, backed up by extensive pupil premium funding.

.

Chart 11: FSM/other gaps for headline GCSE measures, 2011 to 2014.

.

Chart 12 shows a slightly more positive pattern for the gaps between disadvantaged learners (essentially ‘ever 6 FSM’ and looked after children) and their peers.

There have been improvements on four of the five headline measures since 2011. But since 2012, only one or two of the measures have improved, according to which 2014 methodology is selected. Compared with 2013, either three or four of the 2014 headline measures are down.

The application of the 2013 methodology in 2014, rather than the 2014 methodology, causes all five of the gaps to increase, so reinforcing the point made above that the reforms themselves do not appear to be driving this negative trend.

It is unlikely that this pattern will be any different at higher attainment levels, but evidence to prove or disprove this remains disturbingly elusive.

.

Chart 12: Disadvantaged/other gaps for headline GCSE measures, 2011 to 2014

.

Taken together, this evidence does not provide a ringing endorsement of the Government’s strategy for closing these gaps.

There are various reasons why this might be the case:

- It is too soon to see a significant effect from the pupil premium or other Government reforms: This is the most likely defensive line, although it raises the question of why more urgent action was/is discounted.

- Pupil premium is insufficiently targeted at the students/schools that need it most: This is presumably what underlies the Fair Education Alliance’s misguided recommendation that pupil premium funding should be diverted away from high attaining disadvantaged learners towards their lower attaining peers.

- Schools enjoy too much flexibility over how they use the pupil premium and too many are using it unwisely: This might point towards more rigorous evaluation, tighter accountability mechanisms and stronger guidance.

- Pupil premium funding is too low to make a real difference: This might be advanced by institutions concerned at the impact of cuts elsewhere in their budgets.

- Money isn’t the answer: This might suggest that the pupil premium concept is fundamentally misguided and that the system as a whole needs to take a different or more holistic approach.

I have proposed a more targeted method of tackling secondary excellence gaps and simultaneously strengthening fair access, where funding topsliced from the pupil premium is fed into personal budgets for disadvantaged high attainers.

These would meet the cost of coherent, long-term personalised support programmes, co-ordinated by their schools and colleges, which would access suitable services from a ‘managed market’ of suppliers.

.

Conclusion

This analysis suggests that high attainers, particularly those in selective schools, have been relatively less affected by the reforms that have depressed GCSE results in 2014.

While we should be thankful for small mercies, three issues are of particular concern:

- There is a stubborn and serious problem with the achievement of expected progress in both English and maths. It cannot be acceptable that approximately one in seven high attainers fails to make three levels of progress in each core subject when this is a relatively undemanding expectation for those with high prior attainment. This issue is particularly acute in sponsored academies where one in four or five high attainers are undershooting their progress targets.

- Underachievement amongst high attainers is prevalent in far too many state-funded schools and colleges. At KS4 there are huge variations in the performance of high-attaining students depending on which schools they attend. A handful of schools achieve better outcomes with their middle attainers than with their high attainers. This ought to be a strong signal, to the schools as well as to Ofsted, that something serious is amiss.

- Progress in closing KS4 FSM gaps continues to be elusive, despite this being a national priority, backed up by a pupil premium budget of £2.5bn a year. In the absence of data about the performance of disadvantaged high attainers, we can only assume that this is equally true of excellence gaps.

.

GP

February 2015